Hello again! After my first post where I shared why I decided to start this blog, today I want to share a new adventure I've embarked on: exploring Artificial Intelligence models locally with Docker Model Runner.

You see, my trusty work companion is a MacBook Pro with an Apple M1 Pro chip and 16GB of RAM. A powerful machine, yes, but from what I've read, when it comes to playing with AI models, especially the larger ones, things can get slow or even impossible. That's why I started looking for alternatives that would let me experiment with different models without relying completely on cloud resources. Cloud resources are very useful, but they can come with costs or limitations when you just want to try something new at any time.

I'm a learner in this fascinating world of AI, so if I say anything that's not completely accurate, I ask for your understanding in advance! I'm here to learn and share what I discover.

The good news is that, starting with Docker Desktop version 4.40, we have a very interesting tool called Docker Model Runner. This opened up a new world of possibilities for me to tinker with models directly on my machine.

First Steps with Docker Model Runner

Here's a very basic guide on how I started setting it up:

- Make sure you have Docker Desktop installed and updated: As I mentioned, version 4.40 or higher is needed.

- Run the command docker model status from the terminal. You should see: Docker Model Runner is running.

- Explore available models: Docker Hub is the place where you can find different ready-to-use models.

- "Pull" the model: Once you find a model you're interested in, you can use the docker model pull [model name] command in your terminal. For example: docker model pull ai/qwen2.5:0.5B-F16.
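If you prefer to script these steps, here is a minimal Python sketch that runs the same two CLI commands in order. The helper names (build_commands, run_setup) are my own and not part of Docker; the only things taken from the steps above are the docker model status and docker model pull commands and the model tag.

```python
import shutil
import subprocess

MODEL = "ai/qwen2.5:0.5B-F16"  # the model tag used in this post


def build_commands(model: str) -> list[list[str]]:
    """Return the CLI calls from the steps above, in the order they are run."""
    return [
        ["docker", "model", "status"],
        ["docker", "model", "pull", model],
    ]


def run_setup(model: str = MODEL) -> None:
    """Run each command and stop at the first one that fails."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    for cmd in build_commands(model):
        print("$", " ".join(cmd))
        # check=True raises CalledProcessError on a non-zero exit code
        subprocess.run(cmd, check=True)
```

Calling run_setup() from a Python session should print each command before running it, so it is easy to see which step failed if something goes wrong.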

My First Experiment: Qwen2.5 0.5B-F16

For my initial exercise, I decided on the ai/qwen2.5:0.5B-F16 model. The reason? Well, being a relatively small model (0.5 billion parameters in 16-bit format), I hoped it could offer decent performance on my M1 Pro while allowing me to start understanding how the interaction works. Keep in mind, though, that this is just the beginning: my plan is to keep trying other models to see how they perform on my machine. I also want to learn what each of these models is used for and in which cases each one is most useful.
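To get a rough feel for why the parameter count and the 16-bit format matter on a 16GB machine, here is a back-of-the-envelope calculation. It only counts the raw weights and ignores everything else the runtime needs (context cache, overhead), so treat it as a lower bound rather than a measurement. The 7B model in the second line is a hypothetical comparison, not something I have tested.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the raw weights in gigabytes (10**9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


print(weight_memory_gb(0.5e9, 16))  # 0.5B parameters at 16 bits -> 1.0
print(weight_memory_gb(7e9, 16))    # hypothetical 7B model at 16 bits -> 14.0
```

By this estimate, the weights of the model I chose take about 1 GB, while a 7B model in the same 16-bit format would already be close to my total RAM.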

Interacting with the Models

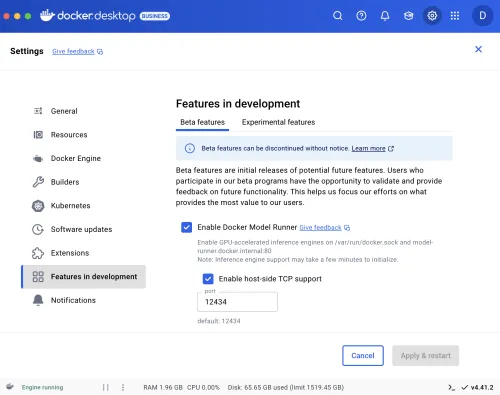

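Once the model is downloaded, Docker Model Runner facilitates interaction through an API. To start, I enabled the "Enable host-side TCP support" option under Features in development in the Docker Desktop settings, so that the API is reachable from the host over TCP (port 12434 in the curl commands below).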

You can also run models directly from your terminal using docker model run. For instance, to get a quick response:

docker model run ai/qwen2.5:0.5B-F16 "Hello, who are you?"

Or, to enter an interactive chat mode with the model, simply run:

docker model run ai/qwen2.5:0.5B-F16

This will allow you to type multiple prompts and get responses in a conversational flow directly in your terminal.

Alternatively, to see which models are available through the llama.cpp engine (which Docker Model Runner uses for certain models), we can use curl to query the local API:

curl http://localhost:12434/engines/llama.cpp/v1/models

This should return a list of the models you have downloaded and that the engine can use.
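The same query can be made from Python using only the standard library. This is a sketch under one assumption I have not verified in detail: that the response follows the OpenAI-style list shape, {"data": [{"id": "..."}, ...]}. The function names are my own; the URL is the one from the curl command above.

```python
import json
import urllib.request

# Base URL taken from the curl command above
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"


def extract_model_ids(payload: dict) -> list[str]:
    """Pull model ids out of an OpenAI-style list response.

    Assumes the shape {"data": [{"id": "..."}, ...]}; entries without
    an "id" key are skipped.
    """
    return [item["id"] for item in payload.get("data", []) if "id" in item]


def list_models(base_url: str = BASE_URL) -> list[str]:
    """GET <base_url>/models and return the ids found in the response."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as resp:
        return extract_model_ids(json.load(resp))
```

With Docker Model Runner running and TCP support enabled, print(list_models()) should show the tags of the models you have pulled. If the real response has a different shape, extract_model_ids is the only place that needs adjusting.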

For more complex chat interactions or to integrate with other applications, you can use the API endpoint. To interact with a chat model, like the one I chose (which supports this functionality), we can use the following structure to send messages and get responses:

curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "ai/qwen2.5:0.5B-F16",
    "messages": [
      {"role": "user", "content": "Hello, how are you?"}
    ]
  }'

This command sends a message to the ai/qwen2.5:0.5B-F16 model and expects a response. The JSON structure is quite intuitive, with roles for the user and the assistant (the model).
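For integrating with other applications, the same request can be sent from Python. The sketch below uses only the standard library and mirrors the curl call: same URL, same header, same JSON body. It assumes the reply follows the OpenAI-style layout, with the text at choices[0].message.content; I have labeled that in the code because it is an assumption on my part. The function names are my own.

```python
import json
import urllib.request

# URL and model tag taken from the curl command above
CHAT_URL = "http://localhost:12434/engines/llama.cpp/v1/chat/completions"
MODEL = "ai/qwen2.5:0.5B-F16"


def build_request(messages: list[dict], model: str = MODEL) -> urllib.request.Request:
    """Build the POST request with the same header and body as the curl call."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        CHAT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Return the assistant text.

    Assumption: OpenAI-style response, choices[0].message.content.
    """
    return response["choices"][0]["message"]["content"]


def chat(messages: list[dict]) -> str:
    """Send the conversation so far and return the model's reply."""
    with urllib.request.urlopen(build_request(messages), timeout=60) as resp:
        return extract_reply(json.load(resp))
```

To keep a conversation going, start with history = [{"role": "user", "content": "Hello, how are you?"}], call chat(history), then append the reply as {"role": "assistant", "content": reply} followed by the next user message before calling chat again. The model only sees what is in the messages list, so the history has to be sent on every request.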

Looking Towards the Future

I'm very excited about the possibilities that Docker Model Runner opens up. I hope this tool allows me to continue deepening my study of Artificial Intelligence, experimenting with more advanced concepts like content rendering and RAG (Retrieval-Augmented Generation) architecture. Who knows, maybe in the not-too-distant future I'll venture into creating a contributed module on Drupal.org that acts as an AI provider for Docker Model Runner models. As far as I can tell, no such provider exists today, and I think it would be of interest to developers. It would be a challenging but very interesting project!

For now, I'll keep exploring and sharing my learnings here. I hope this small introduction to Docker Model Runner has been interesting!